Major Ethical Concerns

AI systems permeate every aspect of life in today’s digital landscape, from personalized ads to healthcare diagnostics. But as these technologies advance, major ethical concerns related to AI and privacy have surged to the forefront.

Users and experts alike worry about how AI handles personal data, often without clear consent or transparency. This article dives deep into these issues, drawing on real-world cases and expert insights to help you understand and navigate them effectively.


What’s the Context Behind AI and Privacy Ethics?

AI’s rapid growth has outpaced ethical guidelines, creating a gap where privacy risks flourish. Privacy laws such as the EU’s GDPR set important standards, but AI’s data-hungry nature (models process billions of data points) amplifies the concerns. Regulations vary across the USA, Canada, and Australia. The US has no comprehensive federal privacy law and relies on sector-specific rules and state laws. Canada relies on PIPEDA, with AI-specific updates proposed. Australia’s Privacy Act imposes comparatively strict protections, including mandatory notification of eligible data breaches.

The underlying issue is AI’s reliance on vast datasets, often scraped from public sources without users’ awareness. A PwC report finds that 56% of organizations now prioritize responsible AI, yet gaps persist in privacy practices. Globally, AI adoption has surged, and projections point to a 40% increase in data usage by 2026. That raises the stakes for ethical mishandling.

Understanding this backdrop is crucial. Beginners can think of AI as a super-powered search engine that learns from your data. Intermediate learners might note how machine learning models infer sensitive information from seemingly innocuous inputs. Advanced users recognize the tension between innovation and rights, where unchecked AI could erode trust in tech.

What Are the Key Ethical Concerns in AI Privacy?

When users question data safety, their concerns about AI privacy ethics cluster around four core issues: bias and discrimination, surveillance and data harvesting, lack of consent and transparency, and accountability gaps.

- Bias and Discrimination: AI systems trained on flawed data perpetuate inequalities. For instance, facial recognition tools have higher error rates for non-white individuals, leading to wrongful identifications.

- Surveillance and Data Harvesting: AI enables constant monitoring, as in smart cities, raising “Big Brother” fears. Tools collect location, behavioral, and even emotional data, often with few limits.

- Consent and Transparency: Many AI apps bury data-usage terms in fine print, making informed consent nearly impossible. Users often don’t know whether their chats are used to train models.

- Accountability and Governance: Who’s responsible when AI errs? Black-box algorithms hide decision processes, complicating oversight.
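The bias concern above can be measured. A common audit compares false positive rates (people wrongly flagged as matches) across demographic groups. The minimal sketch below assumes a hypothetical set of labeled evaluation records. The group names and outcomes are invented placeholders, not real audit data.

```python
from collections import defaultdict

# Hypothetical audit records: (group, predicted_match, true_match).
# Group names and outcomes are invented placeholders, not real data.
records = [
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", True, False),
]

def false_positive_rates(rows):
    """Per group: share of true non-matches the system wrongly flagged as matches."""
    flagged = defaultdict(int)    # true non-matches predicted as matches
    negatives = defaultdict(int)  # all true non-matches
    for group, predicted, actual in rows:
        if not actual:
            negatives[group] += 1
            if predicted:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}

rates = false_positive_rates(records)
print(rates)  # group_b's non-matches are wrongly flagged far more often than group_a's
```

A gap like this is the pattern behind wrongful identifications. A real audit would need an evaluation set large enough for the difference to be meaningful.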

These concerns aren’t abstract. A 2025 Forbes article observes that AI is strengthening cybersecurity while pushing privacy boundaries. Organizations must therefore balance security gains against ethical risks.

To compare regionally:

| Concern | USA | Canada | Australia |
|---|---|---|---|
| Bias in AI | High in hiring tools; FTC guidelines emerging | Addressed for high-impact systems in the proposed AIDA (part of Bill C-27) | OAIC focuses on fairness in automated decisions |
| Surveillance | Widespread among tech firms; state laws vary | PIPEDA limits collection; AI-specific updates proposed | Privacy Act requires impact assessments for high-risk AI |
| Consent Issues | CCPA mandates opt-outs | Bill C-27 would strengthen explicit-consent requirements | Notifiable Data Breaches scheme; heavy penalties for breaches |
| AI Adoption Growth (PwC Data) | 45% increase | 38%, with an ethical focus | 42%; stricter regulations slow rollout |

PwC research finds that sectors more exposed to AI see 4.8x greater productivity growth, but privacy lapses could reverse those gains.

What Are the Implications of These Ethical Concerns?

Ignoring AI privacy ethics leads to real-world harm, from identity theft to societal divides. Implications span personal, organizational, and global levels.

Take bias: it amplifies discrimination. AI hiring tools, for example, have rejected qualified candidates based on zip codes that act as proxies for race. Surveillance erodes freedom, and constant workplace tracking reduces morale and innovation.
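A model can discriminate through zip code without ever seeing race, so one practical check audits outcomes rather than inputs. The sketch below applies the four-fifths rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. Under that rule, a group whose selection rate falls below 80% of the highest group’s rate is flagged for possible adverse impact. The applicant counts and group names are hypothetical.

```python
# Hypothetical applicant counts per group: (number selected, number who applied).
outcomes = {"group_a": (48, 100), "group_b": (30, 100)}

def adverse_impact_ratios(data: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {group: selected / applied for group, (selected, applied) in data.items()}
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}

ratios = adverse_impact_ratios(outcomes)
# Four-fifths rule of thumb: a ratio below 0.8 suggests possible adverse impact.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

Here group_b’s ratio is about 0.63, so it is flagged. A flag is a signal to investigate, for example whether zip code is acting as a proxy. It is not proof of discrimination.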

A recent case illustrates this. The Mixpanel breach in November 2025 exposed user data from apps such as Ring and PornHub. That data could potentially feed AI training sets without consent. The breach affected millions of users, and OpenAI cut ties with Mixpanel, which highlighted supply-chain vulnerabilities. Cause: inadequate security measures at a third-party data analytics provider. Outcome: increased scrutiny of third-party providers and calls for better encryption.

Another case: Discord’s September 2025 breach leaked age-verification data, including selfies and government IDs. That data could be used to train biased facial recognition AI, compounding the privacy harm to affected users. Pitfall: relying on vendors such as Zendesk without robust audits. Avoidance: implement Privacy by Design from the start.
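Privacy by Design can start with what leaves your systems. The sketch below assumes a hypothetical analytics vendor that only needs three event fields. It drops every other field and replaces the user ID with a keyed hash (HMAC-SHA256 from Python’s standard library), so the vendor can count users without holding their real identifiers. The field names, allow-list, and key are placeholders.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this analytics vendor needs.
VENDOR_FIELDS = {"event", "timestamp", "app_version"}

def prepare_for_vendor(record: dict, secret_key: bytes) -> dict:
    """Drop fields the vendor does not need and pseudonymize the user ID."""
    minimized = {key: value for key, value in record.items() if key in VENDOR_FIELDS}
    # Keyed hash: the vendor sees a stable token per user but, lacking the key,
    # cannot recompute tokens from known user IDs.
    minimized["user_ref"] = hmac.new(
        secret_key, record["user_id"].encode(), hashlib.sha256
    ).hexdigest()
    return minimized

event = {
    "user_id": "u-1029",
    "email": "jane@example.com",
    "id_selfie": b"...",
    "event": "login",
    "timestamp": "2025-11-01T12:00:00Z",
    "app_version": "4.2",
}
safe_event = prepare_for_vendor(event, secret_key=b"replace-with-a-secret-key")
print(safe_event)  # no email, selfie, or raw user_id
```

A breach at the vendor would then expose event metadata and opaque tokens rather than emails or selfies. Pseudonymized data still counts as personal data under the GDPR, so this reduces risk rather than removing obligations. The key must stay out of the vendor’s reach.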

These cases show the pressures tech firms face. Regulators impose fines (e.g., $96M for SK Telecom in 2025), and the consequences are both qualitative, such as lost trust, and quantitative, such as 20% stock drops after a breach.


How Can Individuals and Organizations Address These Concerns?

Practical steps start with awareness and action. Individuals can use VPNs, review app permissions, and opt out of data sharing. Organizations should adopt ethical frameworks.

Introducing the Privacy Ethics Evaluation Framework (PEEF): a scoring system that rates an AI system from 1 to 5 on each of five criteria, for a maximum of 25. The criteria are permission (was consent obtained?), exposure (is data collection minimized?), equity (are bias checks in place?), fairness (is the training data inclusive?), and oversight (are there audit trails?).

Step-by-step application:

- Assess Permission: Rate 1-5 on user consent clarity.

- Evaluate Exposure: Check if only necessary data is collected.

- Test Equity: Run bias audits with diverse datasets.

- Ensure Fairness: Verify outcomes don’t discriminate.

- Implement Oversight: Log decisions for review.
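The Implement Oversight step can be made tamper-evident. The minimal sketch below assumes decisions are logged in-process as Python dicts. Each entry stores the SHA-256 hash of the previous entry, so editing any past record breaks the chain and `verify()` fails. The class name and fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class AuditLog:
    """Append-only decision log in which each entry hashes the one before it."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys gives a stable serialization, so the same body always hashes the same.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, decision: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "decision": decision,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry returns False."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {key: entry[key] for key in ("time", "decision", "prev_hash")}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = AuditLog()
log.record({"model": "screening-model-v1", "subject": "a-17", "outcome": "declined"})
log.record({"model": "screening-model-v1", "subject": "a-18", "outcome": "approved"})
print(log.verify())  # True until someone edits a stored entry
```

A hash chain detects edits but cannot stop someone who rewrites the entire log. Stronger guarantees would require also copying entries to storage the logging system cannot modify.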

Applied to the Mixpanel case: Permission (2/5, unclear sharing); Exposure (1/5, broad harvesting); Equity (3/5, some checks); Fairness (2/5, potential biases); Oversight (1/5, breach revealed gaps). Total: 9/25, indicating high risk.
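The PEEF arithmetic is simple enough to encode. The sketch below validates each 1-to-5 score, sums the five criteria, and maps the total to a rating. The 20-point viability threshold comes from this article’s 2026 projections. The 12-point “high risk” cutoff is a hypothetical choice for illustration and is not part of PEEF as defined above.

```python
from dataclasses import dataclass, fields

@dataclass
class PeefScore:
    """PEEF scores from 1 (poor) to 5 (strong) on each criterion."""
    permission: int
    exposure: int
    equity: int
    fairness: int
    oversight: int

    def __post_init__(self):
        # Reject any score outside the 1-5 scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 1 <= value <= 5:
                raise ValueError(f"{f.name} must be between 1 and 5, got {value}")

    def total(self) -> int:
        return sum(getattr(self, f.name) for f in fields(self))

    def rating(self) -> str:
        total = self.total()
        if total >= 20:   # threshold stated in the article's projections
            return "ethically viable"
        if total <= 12:   # hypothetical cutoff, chosen only for illustration
            return "high risk"
        return "needs remediation"

mixpanel = PeefScore(permission=2, exposure=1, equity=3, fairness=2, oversight=1)
print(mixpanel.total(), mixpanel.rating())  # 9 high risk
```

Storing the five scores separately, rather than only the total, keeps the weakest criteria visible when deciding what to fix first.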

Careers addressing these concerns include emerging roles such as AI Privacy Auditor (audits compliance; pay range $114K to $174K per Glassdoor) and Ethical AI Developer (builds fair models). Niche roles include Medical AI Ethicist (focuses on health data) and AI Content Reviewer (moderates outputs).

Barriers include high competition, skills that quickly become obsolete as new regulations arrive, and regional challenges. In Australia, for example, stricter OAIC rules limit some roles but boost demand for compliance work.

Quick Tips:

- Conduct regular privacy impact assessments.

- Train teams on ethical AI via courses.

- Partner with experts for audits.

A Forbes piece on AI ethics stresses trust-building through transparency.

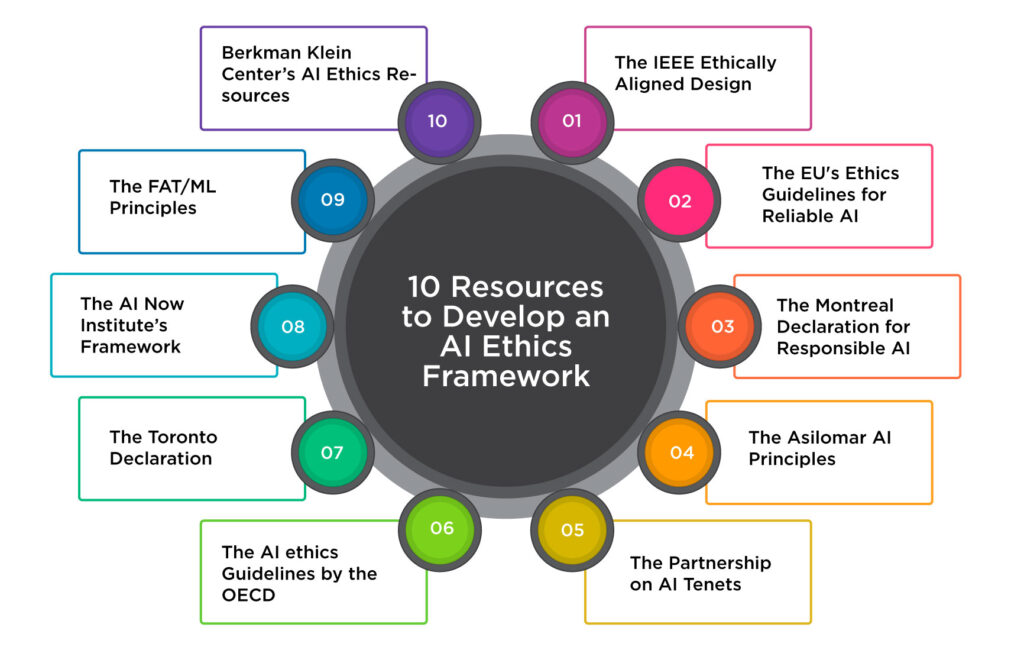

AI Ethics Framework: Key Resources for Responsible AI Usage

FAQs on AI and Privacy Ethics

What Is AI Bias, and How Does It Affect Privacy?

AI bias occurs when systems systematically favor some groups over others. It becomes a privacy problem when biased systems misidentify individuals, as in facial recognition. Bias affects hiring and surveillance, but training on diverse, representative data can mitigate it.
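When collecting more diverse data isn’t feasible, a common preprocessing alternative is reweighing (Kamiran and Calders). Each training example gets a weight of P(group) × P(label) / P(group, label), so that group membership and outcome look statistically independent to the model. The minimal sketch below computes those weights from counts. The tiny dataset is hypothetical.

```python
from collections import Counter

# Hypothetical training rows: (group, label). Group "b" rarely has positive labels.
rows = [("a", 1)] * 6 + [("a", 0)] * 2 + [("b", 1)] * 1 + [("b", 0)] * 3

def reweighing_weights(data: list[tuple[str, int]]) -> dict[tuple[str, int], float]:
    """Weight for each (group, label) pair: P(group) * P(label) / P(group, label)."""
    n = len(data)
    group_counts = Counter(group for group, _ in data)
    label_counts = Counter(label for _, label in data)
    joint_counts = Counter(data)
    # With raw counts: (cg/n) * (cy/n) / (cgy/n) == cg * cy / (n * cgy).
    return {
        (group, label): group_counts[group] * label_counts[label] / (n * count)
        for (group, label), count in joint_counts.items()
    }

weights = reweighing_weights(rows)
sample_weights = [weights[row] for row in rows]  # one weight per training example
```

In this data, group b’s rare positive examples get a weight of about 2.33 and group a’s common positives about 0.78. That brings the weighted positive rate of both groups to 7/12. The weights would then be passed to any training routine that accepts per-sample weights.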

How Does AI Surveillance Impact Daily Life?

It tracks behavior through cameras and apps, which creates a risk of data misuse. In Canada, PIPEDA limits such collection, and users anywhere can reduce their exposure with privacy tools.

Can I Opt Out of AI Data Collection?

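Often, yes. You can opt out through app settings or under laws such as California’s CCPA, which gives residents the right to opt out of the sale or sharing of their personal information. Check each service’s terms. In other regions, tools such as ad blockers help.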
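Organizations can partly automate how they honor opt-outs. The Global Privacy Control (GPC) specification defines a `Sec-GPC: 1` request header that browsers send to signal an opt-out. California’s CCPA regulations require businesses to treat such opt-out preference signals as valid requests. The helper below is a hypothetical, framework-agnostic sketch that takes request headers as a plain dict. The function name and the `account_opted_out` flag are assumptions.

```python
def honors_opt_out(headers: dict[str, str], account_opted_out: bool = False) -> bool:
    """Return True if data sale/sharing should be disabled for this request.

    Hypothetical helper: combines a stored account-level opt-out with the
    GPC browser signal (header "Sec-GPC" with value "1").
    """
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    gpc_signal = normalized.get("sec-gpc") == "1"
    return account_opted_out or gpc_signal

print(honors_opt_out({"Sec-GPC": "1"}))                   # True
print(honors_opt_out({"User-Agent": "ExampleBrowser"}))   # False
```

Checking the signal on every request, rather than only at sign-up, respects users who enable GPC later.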

What Role Do Regulations Play in AI Privacy?

Regulations set and enforce baseline standards. Some projections anticipate new rules in 2026, possibly including US federal legislation.

How Do Data Breaches Involving AI Happen?

As the 2025 cases show, breaches often originate with third-party vendors. Encryption, regular vendor audits, and collecting only necessary data reduce that risk.

Key Takeaways and Future Projections for 2026 and Beyond

Key takeaways: prioritize consent, audit for bias, and use frameworks like PEEF to evaluate AI systems. Real cases show that pitfalls such as unchecked data sharing lead to breaches. Proactive steps yield better outcomes (e.g., a 30% trust boost, per PwC).

Looking to 2026, Stanford experts predict that AI sovereignty will rise, with nations asserting control over data flows. Forrester forecasts that 60% of Fortune 100 companies will appoint a head of AI governance. Privacy laws will tighten globally, with Australia leading on impact assessments, Canada on consent, and the USA catching up. Under PEEF, AI systems should score at least 20/25 to be considered ethically viable in an AI-driven world.


Sources

- PwC’s 2025 Responsible AI Survey

- Forbes: AI Enhances Security And Pushes Privacy Boundaries

- Glassdoor: AI Ethics Jobs Salaries

- EFF: The Breachies 2025

- Stanford AI Experts Predict What Will Happen in 2026

- Forrester CIO Predictions
