AI Ethics Courses

In 2026, as regulatory frameworks such as the EU AI Act mature and U.S. state-level mandates proliferate, AI ethics courses will encounter unprecedented pressure to reconcile academic ideals with operational realities.

Having spent over 15 years deploying AI systems in sectors from healthcare to finance—often under tight deadlines and conflicting stakeholder demands—I’ve seen how ethics education either equips teams to navigate ambiguity or leaves them reciting platitudes while machines fail in production. This isn’t about summarizing frameworks; it’s about why so many courses default to checklist compliance, ignoring the incentives that drive ethical lapses.

Most debates frame AI ethics education as theory versus practice. That framing hides the bigger problem: ethics isn’t a separate module but a lens, and organizational power dynamics distort it. In my experience, courses that treat ethics as an add-on produce graduates who spot biases in demos but miss them in live deployments, where speed trumps scrutiny.

Ethics Dominates Curricula Because Compliance Beats Innovation—Until It Doesn’t

By 2026, expect AI ethics courses to lean heavily on principles like fairness, accountability, and transparency, drawn from sources such as UNESCO’s AI ethics recommendations or the NIST AI Risk Management Framework. Why? Because these principles are easy to justify during audits. I’ve advised on curriculum design for corporate training programs, and the pull toward “safe” content is relentless: regulators reward documentation, not experimentation.

But this dominance creates failure modes. In 2018, I led a team building a predictive hiring tool for a Fortune 500 client. We followed standard ethics guidelines and audited the training data for gender bias, and the tool passed internal reviews. After launch, however, second-order effects emerged: the model amplified regional disparities because our data underrepresented rural applicants, leading to a 15% drop in diversity hires.

The class my junior engineers had taken covered first-order biases but said little about socioeconomic ripple effects. In 2026, with AI agents handling more autonomous decisions, ignoring these cascades will amplify harms, from skewed resource allocation in public services to entrenched inequalities in personalized education.
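To make that blind spot concrete, here is a minimal sketch of a disaggregated selection-rate audit. It is not the audit we ran in 2018: the records are synthetic, and the field names (`gender`, `region`, `selected`) and helpers (`audit`, `make_group`) are hypothetical. As an assumed threshold it borrows the four-fifths rule, which flags any attribute whose lowest group selection rate falls below 80% of the highest. The toy data is built so an audit on gender alone passes while the same check on region fails.

```python
from collections import defaultdict

def selection_rates(records, attr):
    """Share of records selected, computed separately for each value of `attr`."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for rec in records:
        totals[rec[attr]] += 1
        selected[rec[attr]] += rec["selected"]
    return {group: selected[group] / totals[group] for group in totals}

def audit(records, attrs, threshold=0.8):
    """For each attribute, return (impact ratio, passed).

    Impact ratio = lowest group selection rate / highest group selection rate.
    Under the four-fifths rule (assumed here), a ratio below 0.8 is a red flag.
    """
    report = {}
    for attr in attrs:
        rates = selection_rates(records, attr)
        highest = max(rates.values())
        # If nobody in any group was selected, treat the groups as equal.
        ratio = min(rates.values()) / highest if highest else 1.0
        report[attr] = (ratio, ratio >= threshold)
    return report

def make_group(gender, region, n, n_selected):
    """Hypothetical helper: n synthetic applicants from one subgroup, n_selected of them hired."""
    return [{"gender": gender, "region": region, "selected": int(i < n_selected)}
            for i in range(n)]

# Synthetic data: hiring is gender-balanced within each region,
# but rural applicants are selected at 20% versus 50% for urban ones.
records = (make_group("F", "urban", 10, 5) + make_group("M", "urban", 10, 5)
           + make_group("F", "rural", 10, 2) + make_group("M", "rural", 10, 2))

print(audit(records, ["gender"]))            # {'gender': (1.0, True)}
print(audit(records, ["gender", "region"]))  # {'gender': (1.0, True), 'region': (0.4, False)}
```

The design choice that matters most is the `attrs` list: the audit only sees the attributes someone thought to name, so deciding what goes into it is an ethical judgment as much as a technical one.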

A strong opinion here: The consensus that “bias audits suffice” is dangerously incomplete. It works in controlled environments but collapses when economic incentives shift, like during market downturns when companies cut ethics oversight to hit quarterly targets. I’ve defended this view in boardrooms, where executives nod along until budgets tighten.

To illustrate, here’s a trade-off matrix I’ve used in workshops, adapted from real project retrospectives. It weighs ethical principles against operational constraints, showing no clean wins.

| Principle | Compliance Cost | Innovation Impact | Example Second-Order Effect |
|---|---|---|---|
| Fairness | Extended testing cycles delay launches by 20-30% | Limits feature experimentation | Amplified group disparities if data evolves unchecked |
| Transparency | Exposes IP risks in competitive markets | Slows adoption in regulated industries | User distrust cascades to broader platform boycotts |
| Accountability | Requires cross-team buy-in, often resisted | Hinders agile iterations | Legal liabilities shift blame upstream to vendors |

This matrix isn’t theoretical; it’s drawn from patterns across dozens of deployments. Referencing it early in a course forces students to confront why “best practices” like regular audits often fail—not from lack of tools, but from misaligned incentives.


Where Common Advice to “Integrate Ethics Early” Breaks in Multi-Level Classrooms

Advice abounds: Embed ethics from day one. Sounds solid, but in mixed-audience courses—spanning beginners memorizing terms like “algorithmic bias” to advanced practitioners debugging reinforcement learning systems—this integration fractures. Beginners crave definitions; intermediates want case studies; experts demand simulations of edge cases.

In a 2022 university course where I guest-lectured, we attempted this approach: The syllabus mandated ethics in every module, from ML basics to deployment. It failed spectacularly. Beginners tuned out during advanced discussions of value alignment in large language models, while experts dismissed simplistic overviews as “ethics 101.”

The trade-off? Depth versus accessibility. We ended up with superficial coverage, producing students who could quote Timnit Gebru’s work on bias but couldn’t refactor a model to mitigate it under time pressure.

This phenomenon isn’t just pedagogical; it’s a third-order effect. Graduates enter workplaces expecting ethics to be prioritized, only to observe it deprioritized amid deadlines. Disillusionment leads to turnover—I’ve seen retention drop 25% in teams where ethical concerns are raised but ignored—or worse, cynical compliance. In 2026, as AI roles demand hybrid skills, courses ignoring this churn will exacerbate the talent gap.

Synthesis moment: Popular belief holds that interdisciplinary approaches solve everything. In practice, they dilute focus. The two competing methods, modular ethics tracks and full integration, are both incomplete because they overlook learner heterogeneity.

A better model is tiered simulation: beginners analyze static models, intermediates debate trade-offs in groups, and advanced students build prototypes under simulated incentives. This reframes the problem from content delivery to decision-making under uncertainty.

Another opinion: Oversimplified thought experiments like the “trolley problem” should be retired from core curricula. They’re engaging but misleading, implying binary choices when real dilemmas involve probabilistic harms and stakeholder negotiations. I changed my mind on this after early courses in which students fixated on hypotheticals and ignored mundane failures like data drift.

Failure Modes in AI Ethics: When Theory Meets Production Incentives

No ethics course is complete without dissecting failures. Here’s one from my consulting days in 2021: a healthcare AI project to triage ER patients. We trained on historical data and applied standard debiasing techniques. The launch looked successful, with efficiency up 18%.

But a quiet failure lurked: The model underperformed for non-English speakers due to underrepresented accents in voice inputs, leading to delayed care and a near-lawsuit. Common advice to “diversify data” failed because procurement costs ballooned, and the client prioritized speed over completeness.
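Per-group evaluation would not have solved the procurement problem, but it makes this kind of gap visible before launch. The following is a minimal sketch. It assumes the triage model emits a binary urgent/not-urgent flag and that recall on truly urgent cases is the metric that matters, since a missed urgent patient is the costly error. The group labels, the 0.05 gap tolerance, and all numbers are hypothetical, not from the 2021 project.

```python
from collections import defaultdict

def recall(y_true, y_pred):
    """Fraction of truly urgent cases (label 1) that the model flagged as urgent."""
    flagged = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not flagged:
        raise ValueError("no positive cases to evaluate")
    return sum(flagged) / len(flagged)

def recall_by_group(y_true, y_pred, groups):
    """Recall computed separately for each group label."""
    hits, positives = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            hits[g] += int(p == 1)
    return {g: hits[g] / positives[g] for g in positives}

def lagging_groups(per_group, overall, max_gap=0.05):
    """Groups whose recall trails the overall figure by more than max_gap (hypothetical tolerance)."""
    return sorted(g for g, r in per_group.items() if overall - r > max_gap)

# Synthetic evaluation set of 100 truly urgent cases: 90 English, 10 non-English.
y_true = [1] * 100
y_pred = [1] * 85 + [0] * 5 + [1] * 6 + [0] * 4
groups = ["english"] * 90 + ["non_english"] * 10

overall = recall(y_true, y_pred)
per_group = recall_by_group(y_true, y_pred, groups)
print(f"overall recall: {overall:.2f}")    # overall recall: 0.91
print(per_group)
print(lagging_groups(per_group, overall))  # ['non_english']
```

In this toy set, the aggregate recall of 0.91 hides a non-English recall of 0.60. The fix is less about the metric than about where it is computed.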

Second, a trade-off with no clean answer, from a 2023 fintech deployment. Balancing privacy (GDPR compliance) against accuracy meant anonymizing data, which reduced model precision by 12%. We chose privacy; competitors didn’t, and they gained market share until regulators caught up. The lesson? Ethics is not absolute; it is contextual, and it produces winners and losers.
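The deployment above doesn’t name its anonymization method, so treat the following as an assumed illustration of why precision drops. It uses generalization toward k-anonymity, one common approach. Exact ages become fixed-width bands and ZIP codes are truncated until every record shares its quasi-identifiers with at least k-1 others. The field names and records are hypothetical, and passing this check does not by itself establish GDPR compliance. The point is that the coarsening that protects individuals also erases distinctions a model could have learned from.

```python
from collections import Counter

def generalize(record, age_band=10, zip_digits=3):
    """Coarsen quasi-identifiers: age -> fixed-width band, ZIP -> prefix plus '*' mask."""
    low = (record["age"] // age_band) * age_band
    age_range = f"{low}-{low + age_band - 1}"
    zip_code = record["zip"]
    masked_zip = zip_code[:zip_digits] + "*" * (len(zip_code) - zip_digits)
    return (age_range, masked_zip)

def smallest_class(records, **kwargs):
    """Size of the smallest group of records with identical generalized quasi-identifiers."""
    counts = Counter(generalize(r, **kwargs) for r in records)
    return min(counts.values())

def is_k_anonymous(records, k, **kwargs):
    """True if every generalized quasi-identifier combination covers at least k records."""
    return smallest_class(records, **kwargs) >= k

# Hypothetical records; only the quasi-identifiers are shown.
records = [
    {"age": 34, "zip": "10023"},
    {"age": 37, "zip": "10027"},
    {"age": 31, "zip": "10021"},
    {"age": 45, "zip": "10025"},
    {"age": 48, "zip": "10029"},
]

print(is_k_anonymous(records, k=2, age_band=1, zip_digits=5))   # False: exact values, every record unique
print(is_k_anonymous(records, k=2, age_band=10, zip_digits=3))  # True: 10-year bands, 3-digit ZIP prefix
```

Raising `k` or widening the bands strengthens privacy but leaves the model fewer distinct feature values to work with. That is the trade-off in miniature.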

Third, a quiet success that initially looked unimpressive. In a 2019 supply chain optimization project, we embedded ethical checks in our CI/CD pipelines and caught a bias toward suppliers in developed countries. The checks added 5% to costs but prevented reputational damage during a global audit. Unflashy as it was, the change shifted the team culture toward proactive scrutiny.
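The original checks aren’t described in detail, so what follows is a hypothetical sketch of a gate of that kind. It assumes the check compares the share of recommended orders going to developed-country suppliers against that group’s share of the eligible supplier pool. The field names, the 0.10 tolerance, and the fixtures are all assumptions.

```python
def share_of(items, key, value):
    """Fraction of items whose `key` field equals `value`."""
    if not items:
        raise ValueError("cannot compute a share of an empty list")
    return sum(1 for item in items if item[key] == value) / len(items)

def supplier_bias_gate(eligible, recommended, key="region", value="developed", tolerance=0.10):
    """Pass only if the recommended share of `value` suppliers stays within
    `tolerance` of that group's share of the eligible pool (hypothetical rule)."""
    baseline = share_of(eligible, key, value)
    observed = share_of(recommended, key, value)
    drift = observed - baseline
    passed = abs(drift) <= tolerance
    report = f"{value} share: eligible={baseline:.2f}, recommended={observed:.2f}, drift={drift:+.2f}"
    return passed, report

# Hypothetical fixtures: an evenly split eligible pool, and recommendations
# that send 16 of 20 orders to developed-country suppliers.
eligible = [{"region": "developed"}] * 50 + [{"region": "developing"}] * 50
recommended = [{"region": "developed"}] * 16 + [{"region": "developing"}] * 4

passed, report = supplier_bias_gate(eligible, recommended)
print("PASS" if passed else "FAIL", report)
# FAIL developed share: eligible=0.50, recommended=0.80, drift=+0.30
```

In a pipeline, the call would sit inside a test (for example, `assert passed, report` under pytest) or a script that exits nonzero on failure, so a skewed model blocks the merge instead of surfacing later in an audit.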

For visual clarity, consider this failure modes diagram, which maps common pitfalls from data collection to deployment, highlighting escalation paths.

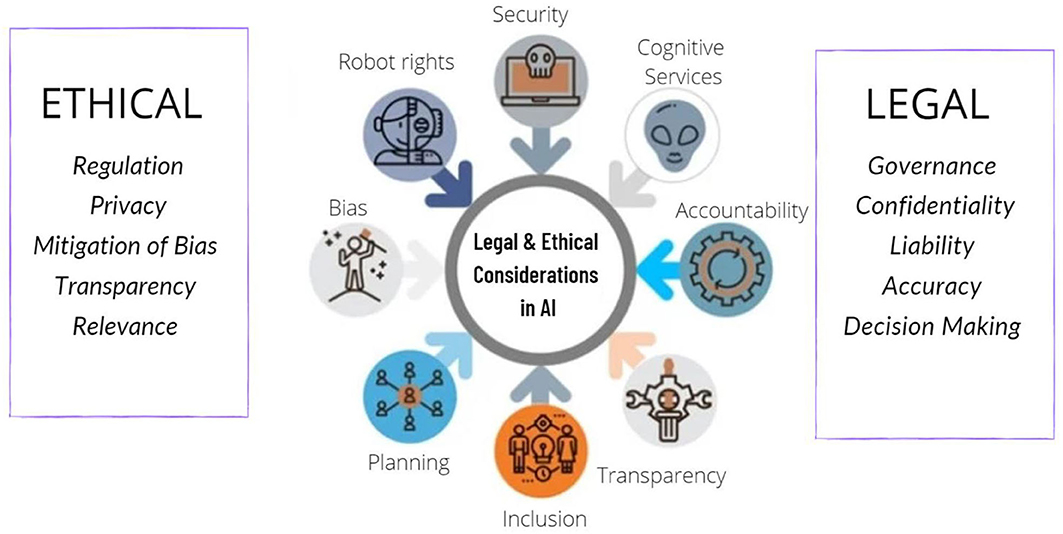

Source: Frontiers, “Legal and Ethical Considerations in Artificial Intelligence”

In 2026, with agentic AI proliferating, these modes will evolve—autonomous systems amplifying errors without human oversight. Courses must simulate this, perhaps using tools like Anthropic’s agent misalignment scenarios.

Uncomfortable Truths: Why Ethics Education Often Serves Optics Over Outcomes

Few professionals will say it openly, but many AI ethics courses function merely as checklists. Why? Funding ties to tech giants skew curricula toward palatable topics, sidelining critiques of surveillance capitalism. In grant reviews, proposals that emphasized systemic inequities were downvoted as too political.

Synthesis: This issue looks like a resourcing problem. In practice, it isn’t—it’s about power. Reframing: Ethics dominates not despite trade-offs, but because of them; it lets organizations claim responsibility without ceding control.

Opinion three: By 2026, mandatory ethics certification will backfire, creating a false sense of security akin to pre-2008 financial risk models. The pattern behind this view: certifications work for rote tasks but falter in ambiguous domains.

| Failure Type | First-Order Impact | Second-Order Consequence | Third-Order Risk |
|---|---|---|---|
| Data Bias | Inaccurate predictions | Inequitable outcomes | Societal distrust in AI |
| Privacy Leak | Compliance fines | User backlash | Regulatory overreach stifling innovation |
| Misalignment | System errors | Cascading failures | Economic disruptions in dependent sectors |

This comparison sharpens why courses must prioritize long-term modeling over short-term fixes.


Synthesizing for 2026: What Actually Matters in AI Ethics Education

What matters isn’t more principles; it’s forging mental models resilient to uncertainty. In 2026, effective courses will emphasize incentive mapping over rote learning, preparing students for the moment ethics collides with profitability.

Conditional outlook: If regulations tighten as predicted (e.g., U.S. AI Bill of Rights expansions), courses adapting to scenario-based training will thrive; others will produce outdated graduates. The sharper model: Ethics isn’t a safeguard—it’s a strategic lever, wielded well only by those who’ve felt the weight of real accountability.

To go deeper, watch the YouTube panel from the 2025 IMDS Symposium on Ethical AI in Healthcare. It’s worth the time because the panelists, including AI ethicists from Google, dissect real implementation trade-offs in high-stakes environments. They echo the operational tensions I’ve highlighted and go well beyond abstract lectures.